Training Inception Model

No edit summary |

|||

| (10 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

==Training your custom inception model== | ==Training your custom inception model== | ||

This tutorial is based on Tensorflow v1.12 and Emgu TF v1.12. | |||

Follow [https://www.tensorflow.org/tutorials/image_retraining this tensorflow tutorial] to retrain a new inception model. | Follow [https://www.tensorflow.org/tutorials/image_retraining this tensorflow tutorial] to retrain a new inception model. | ||

'''Note: The above link has been removed from tensorflow website and the new tutorial based on Tensorflow v2.x is available [https://www.tensorflow.org/hub/tutorials/tf2_image_retraining here]. If you follow Tensorflow v2.x tutorial you will have a "saved_model" format that will need to be loaded differently than the tutorial code below.''' | |||

You can use the flower data from the tutorial, or you can create your own training data by replacing the data folder structures with your own. If you follows the tutorial for retraining, you should now have two files: | You can use the flower data from the tutorial, or you can create your own training data by replacing the data folder structures with your own. If you follows the tutorial for retraining, you should now have two files: | ||

| Line 7: | Line 11: | ||

==Optimize the graph for inference== | ==Optimize the graph for inference== | ||

We would like to optimized the inception graph for inference. | We would like to optimized the inception graph for inference. | ||

First we need to install bazel. Looking at [https://www.tensorflow.org/install/source this page] we know that the official tensorflow 1.12.0 is built with bazel 0.15.0. We can download the bazel 0.15.0 release [https://github.com/bazelbuild/bazel/releases/tag/0.15.0 here]. | |||

Once bazel is downloaded and is installed, we can build the optimize_for_inference module as follows: | |||

<pre> | <pre> | ||

bazel build tensorflow/python/tools:optimize_for_inference | bazel build tensorflow/python/tools:optimize_for_inference | ||

</pre> | </pre> | ||

| Line 19: | Line 27: | ||

--input=/tmp/output_graph.pb \ | --input=/tmp/output_graph.pb \ | ||

--output=/tmp/optimized_graph.pb \ | --output=/tmp/optimized_graph.pb \ | ||

--input_names= | --input_names="Placeholder" \ | ||

--output_names=final_result | --output_names="final_result" | ||

</pre> | </pre> | ||

| Line 26: | Line 34: | ||

== Deploying the model == | == Deploying the model == | ||

Emgu.TF v1. | Emgu.TF v1.12 includes an InceptionObjectRecognition demo project. We can modify the project to use our custom trained model. | ||

We can either include the trained model with our application, or, in our case, upload the trained model to internet for the app to download it, to reduce the application size. We have uploaded our two trained model files to github, under the url: <pre> | We can either include the trained model with our application, or, in our case, upload the trained model to internet for the app to download it, to reduce the application size. We have uploaded our two trained model files to github, under the url: <pre> | ||

| Line 49: | Line 57: | ||

... | ... | ||

public MainForm() | |||

{ | |||

... | |||

inceptionGraph = new Inception(); | |||

inceptionGraph.OnDownloadProgressChanged += OnDownloadProgressChangedEventHandler; | |||

inceptionGraph.OnDownloadCompleted += onDownloadCompleted; | |||

inceptionGraph.Init( | |||

new string[] {"optimized_graph.pb", "output_labels.txt"}, | |||

"https://github.com/emgucv/models/raw/master/inception_flower_retrain/", | |||

"Placeholder", | |||

"final_result"); | |||

... | |||

} | |||

public void Recognize(String fileName) | public void Recognize(String fileName) | ||

{ | { | ||

Tensor imageTensor = ImageIO.ReadTensorFromImageFile<float>(fileName, 299, 299, 0.0f, 1.0f / 255.0f);; | |||

// | Inception.RecognitionResult result; | ||

if (_coldSession) | |||

{ | |||

//First run of the recognition graph, here we will compile the graph and initialize the session | |||

//This is expected to take much longer time than consecutive runs. | |||

result = inceptionGraph.MostLikely(imageTensor); | |||

_coldSession = false; | |||

} | |||

// | //Here we are trying to time the execution of the graph after it is loaded | ||

//If we are not interest in the performance, we can skip the following 3 lines | |||

Stopwatch sw = Stopwatch.StartNew(); | |||

result = inceptionGraph.MostLikely(imageTensor); | |||

sw.Stop(); | |||

String resStr = String.Empty; | |||

if (result != null) | |||

resStr = String.Format( | |||

"Object is {0} with {1}% probability. Recognition done in {2} in {3} milliseconds.", | |||

result.Label, | |||

result.Probability * 100, | |||

TfInvoke.IsGoogleCudaEnabled ? "GPU" : "CPU", sw.ElapsedMilliseconds); | |||

if (InvokeRequired) | |||

if ( | |||

{ | { | ||

this.Invoke((MethodInvoker)(() => | |||

{ | { | ||

fileNameTextBox.Text = fileName; | |||

pictureBox.ImageLocation = fileName; | |||

messageLabel.Text = resStr; | |||

})); | |||

} | |||

else | |||

{ | |||

fileNameTextBox.Text = fileName; | |||

pictureBox.ImageLocation = fileName; | |||

messageLabel.Text = resStr; | |||

} | } | ||

} | } | ||

| Line 96: | Line 127: | ||

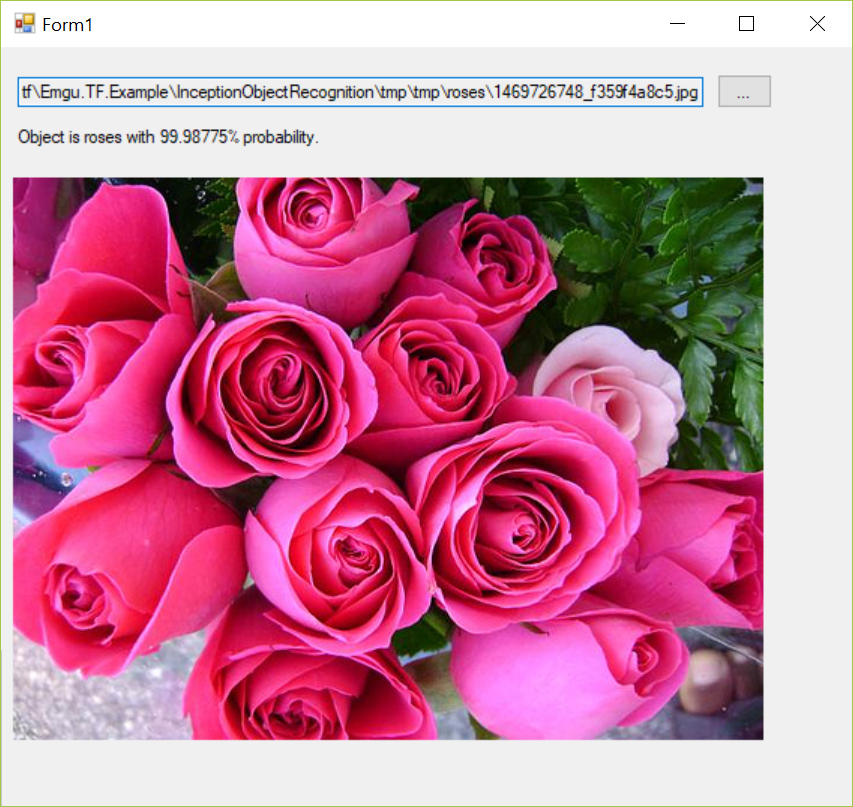

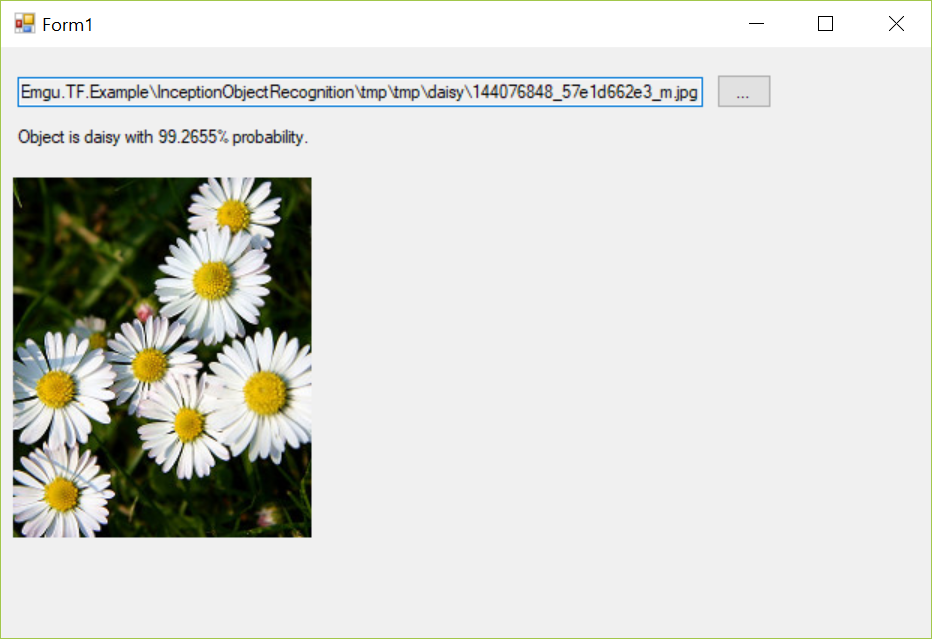

We run our inception demo program, and voila | We run our inception demo program, and voila | ||

[[File:flower_recognition_daisy_1.png]] | [[File:flower_recognition_daisy_1.png]] | ||

[[File:flower_recognition_rose_1.png]] | |||

==Converting to tensorflow lite model== | |||

We can convert the flower model to tensor flow lite, such that we can run the inception model on mobile devices. | |||

<pre> | |||

IMAGE_SIZE=299 | |||

tflite_convert \ | |||

--graph_def_file=/tmp/optimized_graph.pb \ | |||

--output_file=/tmp/optimized_graph.tflite \ | |||

--input_format=TENSORFLOW_GRAPHDEF \ | |||

--output_format=TFLITE \ | |||

--input_shape=1,${IMAGE_SIZE},${IMAGE_SIZE},3 \ | |||

--input_array=Placeholder \ | |||

--output_array=final_result \ | |||

--inference_type=FLOAT \ | |||

--input_data_type=FLOAT | |||

</pre> | |||

This will produce a <code>/tmp/optimized_graph.lite</code> file which is a tensorflow lite model. | |||

Latest revision as of 17:36, 14 October 2021

Training your custom inception model

This tutorial is based on TensorFlow v1.12 and Emgu TF v1.12.

Follow the TensorFlow image retraining tutorial (https://www.tensorflow.org/tutorials/image_retraining) to retrain a new Inception model.

Note: The above link has been removed from the TensorFlow website. A new tutorial based on TensorFlow v2.x is available at https://www.tensorflow.org/hub/tutorials/tf2_image_retraining. If you follow the TensorFlow v2.x tutorial you will get a model in the "saved_model" format, which needs to be loaded differently from the tutorial code below.

You can use the flower data from the tutorial, or you can create your own training data by replacing the data folder structure with your own. If you follow the tutorial for retraining, you should now have two files:

/tmp/output_graph.pb and /tmp/output_labels.txt
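If you supply your own training data, the retraining script from the tutorial is understood to treat each immediate subfolder of the image directory as one class and to use the folder name as the label. The following is a minimal shell sketch for previewing the labels a folder layout would produce; the function name `list_labels` and the demo folder names are illustrative assumptions, not part of the tutorial.

```shell
#!/usr/bin/env bash
# Sketch: list the class labels implied by a training-data folder layout.
# Assumption: one subfolder per class, folder name = label.

list_labels() {
  local image_dir="$1"
  # Only immediate subdirectories count as classes.
  find "$image_dir" -mindepth 1 -maxdepth 1 -type d -exec basename {} \; | sort
}

# Demo on a throwaway directory (hypothetical class names).
demo_dir="$(mktemp -d)"
mkdir -p "$demo_dir/daisy" "$demo_dir/roses" "$demo_dir/tulips"
list_labels "$demo_dir"
```

Run it against your own image directory before retraining to catch stray or misnamed folders early.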

Optimize the graph for inference

We would like to optimize the Inception graph for inference.

First we need to install Bazel. According to the TensorFlow build-from-source page (https://www.tensorflow.org/install/source), the official TensorFlow 1.12.0 is built with Bazel 0.15.0. The Bazel 0.15.0 release can be downloaded from https://github.com/bazelbuild/bazel/releases/tag/0.15.0.

Once Bazel is downloaded and installed, we can build the optimize_for_inference module as follows:

bazel build tensorflow/python/tools:optimize_for_inference

Now we optimize our graph:

bazel-bin/tensorflow/python/tools/optimize_for_inference \
  --input=/tmp/output_graph.pb \
  --output=/tmp/optimized_graph.pb \
  --input_names="Placeholder" \
  --output_names="final_result"

An inference-optimized graph, optimized_graph.pb, will be generated. We can use it along with the output_labels.txt file to recognize flowers.
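Before moving on to deployment, it can help to confirm that both files exist and are non-empty. The sketch below is one way to do that; the function name `check_model_files` is hypothetical, and it assumes the labels file holds one label per line. The demo runs on temporary stand-in files so it does not depend on a real model being present.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the graph and labels files produced by the steps above.

check_model_files() {
  local graph="$1" labels="$2"
  [ -s "$graph" ]  || { echo "missing or empty graph: $graph" >&2; return 1; }
  [ -s "$labels" ] || { echo "missing or empty labels: $labels" >&2; return 1; }
  # Count non-empty lines; assumed to be one label per line.
  local n
  n="$(grep -c . "$labels")"
  echo "ok: $n labels"
}

# Demo with stand-in files (not a real model).
tmp_dir="$(mktemp -d)"
printf 'placeholder-bytes' > "$tmp_dir/optimized_graph.pb"
printf 'daisy\nroses\n'    > "$tmp_dir/output_labels.txt"
check_model_files "$tmp_dir/optimized_graph.pb" "$tmp_dir/output_labels.txt"
```

For the real files, call `check_model_files /tmp/optimized_graph.pb /tmp/output_labels.txt`.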

Deploying the model

Emgu.TF v1.12 includes an InceptionObjectRecognition demo project. We can modify the project to use our custom trained model.

We can either bundle the trained model with our application or, as in our case, upload the trained model to the internet for the app to download, which reduces the application size. We have uploaded our two trained model files to GitHub, under the URL:

https://github.com/emgucv/models/raw/master/inception_flower_retrain/
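The demo code passes the file names and the base URL separately. Presumably each file is fetched from the base URL with the file name appended; that is an assumption about the download behaviour, not something confirmed by the Emgu TF source. Under that assumption, the resulting download URLs can be previewed with a short shell sketch (`model_urls` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Sketch: compose per-file download URLs from a base URL and file names.
# Assumption: files are fetched from <base URL>/<file name>.

model_urls() {
  local base="${1%/}"   # drop a trailing slash so we do not emit "//"
  shift
  local f
  for f in "$@"; do
    printf '%s/%s\n' "$base" "$f"
  done
}

base_url="https://github.com/emgucv/models/raw/master/inception_flower_retrain/"
model_urls "$base_url" optimized_graph.pb output_labels.txt
```

Opening the printed URLs in a browser, or fetching them with a download tool, is a quick way to confirm the uploaded files are reachable before wiring them into the app.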

Source Code

We comment out the code that downloads the standard Inception v3 model, and uncomment the code that uses our custom trained model to recognize flowers.

using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Diagnostics; // needed for Stopwatch
using System.Drawing;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;
using Emgu.TF;
using Emgu.TF.Models;

...

public MainForm()
{
    ...
    inceptionGraph = new Inception();
    inceptionGraph.OnDownloadProgressChanged += OnDownloadProgressChangedEventHandler;
    inceptionGraph.OnDownloadCompleted += onDownloadCompleted;

    //Use our custom trained model: file names, base URL, input and output operation names
    inceptionGraph.Init(
        new string[] {"optimized_graph.pb", "output_labels.txt"},
        "https://github.com/emgucv/models/raw/master/inception_flower_retrain/",
        "Placeholder",
        "final_result");
    ...
}

public void Recognize(String fileName)
{
    Tensor imageTensor = ImageIO.ReadTensorFromImageFile<float>(fileName, 299, 299, 0.0f, 1.0f / 255.0f);

    Inception.RecognitionResult result;
    if (_coldSession)
    {
        //First run of the recognition graph, here we will compile the graph and initialize the session
        //This is expected to take much longer than subsequent runs.
        result = inceptionGraph.MostLikely(imageTensor);
        _coldSession = false;
    }

    //Here we time the execution of the graph after it is loaded
    //If we are not interested in the performance, the Stopwatch calls can be removed
    Stopwatch sw = Stopwatch.StartNew();
    result = inceptionGraph.MostLikely(imageTensor);
    sw.Stop();

    String resStr = String.Empty;
    if (result != null)
        resStr = String.Format(
            "Object is {0} with {1}% probability. Recognition done in {2} in {3} milliseconds.",
            result.Label,
            result.Probability * 100,
            TfInvoke.IsGoogleCudaEnabled ? "GPU" : "CPU", sw.ElapsedMilliseconds);

    //Update the UI on the UI thread
    if (InvokeRequired)
    {
        this.Invoke((MethodInvoker)(() =>
        {
            fileNameTextBox.Text = fileName;
            pictureBox.ImageLocation = fileName;
            messageLabel.Text = resStr;
        }));
    }
    else
    {
        fileNameTextBox.Text = fileName;
        pictureBox.ImageLocation = fileName;
        messageLabel.Text = resStr;
    }
}

...

Results

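We run our Inception demo program, and voilà, the flowers are recognized (screenshots: flower_recognition_daisy_1.png and flower_recognition_rose_1.png).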
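Conceptually, the message shown by the demo comes from picking the label with the highest score. The sketch below illustrates that selection in shell and awk, assuming one label per line in the labels file and one score per label in the same order. It illustrates the idea only and is not Emgu TF's implementation of MostLikely; the function name `most_likely` and the sample scores are made up.

```shell
#!/usr/bin/env bash
# Sketch: pick the highest-scoring label and format a demo-style message.
# Assumption: scores are given in the same order as the lines of the labels file.

most_likely() {
  local labels_file="$1"
  shift
  # Pair each label with its score (tab-separated), then keep the maximum.
  printf '%s\n' "$@" | paste "$labels_file" - | awk -F'\t' '
    NR == 1 || ($2 + 0) > best { best = $2 + 0; label = $1 }
    END { printf "Object is %s with %.1f%% probability.\n", label, best * 100 }'
}

# Demo with made-up labels and scores.
labels_tmp="$(mktemp)"
printf 'daisy\nroses\ntulips\n' > "$labels_tmp"
most_likely "$labels_tmp" 0.1 0.7 0.2
```

With the made-up scores above, the sketch reports roses as the most likely label.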

Converting to tensorflow lite model

We can convert the flower model to TensorFlow Lite, so that we can run the Inception model on mobile devices.

IMAGE_SIZE=299
tflite_convert \
  --graph_def_file=/tmp/optimized_graph.pb \
  --output_file=/tmp/optimized_graph.tflite \
  --input_format=TENSORFLOW_GRAPHDEF \
  --output_format=TFLITE \
  --input_shape=1,${IMAGE_SIZE},${IMAGE_SIZE},3 \
  --input_array=Placeholder \
  --output_array=final_result \
  --inference_type=FLOAT \
  --input_data_type=FLOAT

This will produce a /tmp/optimized_graph.tflite file, which is a TensorFlow Lite model.
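On a mobile device the input buffer has to match the shape passed to the converter. As a small worked example, the sketch below computes how many values a 1 x 299 x 299 x 3 input holds and how many bytes that takes, assuming 4-byte floats (consistent with the FLOAT input type above). The helper name `tensor_elements` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: element count and byte size of the model input tensor.
# Assumption: FLOAT inputs are 4 bytes each (float32).

tensor_elements() {
  local IFS=','          # split the shape string on commas
  local n=1 d
  for d in $1; do
    n=$((n * d))
  done
  echo "$n"
}

IMAGE_SIZE=299
input_shape="1,${IMAGE_SIZE},${IMAGE_SIZE},3"
elements="$(tensor_elements "$input_shape")"
echo "elements: $elements, bytes as float32: $((elements * 4))"
```

For the 299 x 299 RGB input this gives 268203 values, or 1072812 bytes (about 1 MB) per image.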